rsync (Everyone seems to like -z, but it is much slower for me)

- a: archive mode - recursive, preserves owner, preserves permissions, preserves modification times, preserves group, copies symlinks as symlinks, preserves device files.

- H: preserves hard-links

- A: preserves ACLs

- X: preserves extended attributes

- x: don't cross file-system boundaries

- v: increase verbosity

- --numeric-ids: don't map uid/gid values by user/group name

- --delete: delete extraneous files from dest dirs (differential clean-up during sync)

- --progress: show progress during transfer

ssh

- T: turn off pseudo-tty to decrease cpu load on destination.

- c arcfour: use the weakest but fastest SSH encryption. Must specify "Ciphers arcfour" in sshd_config on destination.

- o Compression=no: Turn off SSH compression.

- x: turn off X forwarding if it is on by default.
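
Before settling on a cipher, it may be worth checking what your local ssh client actually offers, since newer OpenSSH releases have dropped arcfour. A minimal sketch, assuming an OpenSSH client that supports the `ssh -Q cipher` query; the helper name `cipher_in_list` is made up for this example, and this only checks the client side, not the sshd on the destination:

```shell
# Succeed if the cipher named in $1 appears as a whole line on stdin.
# (cipher_in_list is a hypothetical helper, not part of ssh.)
cipher_in_list() {
  grep -Fqx -- "$1"
}

# Only query ssh if it is installed on this machine.
if command -v ssh >/dev/null 2>&1; then
  for c in arcfour aes128-ctr; do
    if ssh -Q cipher | cipher_in_list "$c"; then
      echo "$c: offered by this client"
    else
      echo "$c: not offered by this client"
    fi
  done
fi
```

If a cipher is not offered on either end, `ssh -c <cipher>` will fail to connect, so picking one from this list avoids a confusing error in the middle of an rsync command line.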

Original

rsync -aHAXxv --numeric-ids --delete --progress -e "ssh -T -c arcfour -o Compression=no -x" user@<source>:<source_dir> <dest_dir>

Flip

rsync -aHAXxv --numeric-ids --delete --progress -e "ssh -T -c arcfour -o Compression=no -x" [source_dir] [dest_host:/dest_dir]
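
If you end up running both directions regularly, a small wrapper can keep the options in one place. This is only a sketch: the function name `sync_tree`, the `RUN` switch, and the host and paths are all made up for illustration, and I swapped in aes128-ctr because arcfour is missing from newer OpenSSH builds. By default it only prints the command, so nothing is transferred until you set RUN=1.

```shell
# Shared options from the commands above (cipher swapped, see note).
RSYNC_OPTS='-aHAXxv --numeric-ids --delete --progress'
SSH_CMD='ssh -T -c aes128-ctr -o Compression=no -x'

# sync_tree SRC DEST: print the rsync command; run it only when RUN=1.
sync_tree() {
  if [ "${RUN:-0}" = 1 ]; then
    # RSYNC_OPTS is deliberately unquoted so it splits into separate flags.
    rsync $RSYNC_OPTS -e "$SSH_CMD" "$1" "$2"
  else
    printf 'rsync %s -e "%s" %s %s\n' "$RSYNC_OPTS" "$SSH_CMD" "$1" "$2"
  fi
}

# "Original" direction: pull from a remote source (hypothetical host and paths).
sync_tree 'user@nas.example:/volume1/data/' '/Volumes/backup/data/'

# "Flip" direction: push a local source to a remote destination.
sync_tree '/Volumes/backup/data/' 'user@nas.example:/volume1/data/'
```

Printing first gives you a chance to read SOURCE and DESTINATION back before --delete gets to act on anything.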

This was such a great post to find!

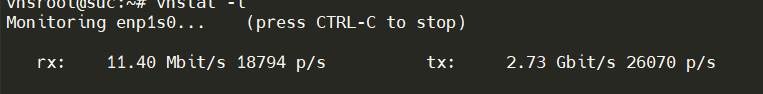

I was set to leave my transfer running at 5-10MB/s, but couldn't go to sleep with 1.2TB taking 30 hours!

(This was also from Synology NAS to MacOS)

As others mentioned earlier in this thread, specific SSH options can affect the transfer rate dramatically: -e "ssh -T -c aes128-ctr -o Compression=no -x". The compression setting matters most. I couldn't see notable differences between ciphers (I didn't test beyond comparing with "-c [email protected]"), but I got variable rates of up to 50-90MB/sec.

DO use --dry-run and --itemize-changes, which together give a great record of what is actually going to happen.
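
To get a feel for what that record looks like, here is a throwaway local experiment. It is a sketch, assuming rsync is installed; the temp directories and file names are invented for the demo and nothing outside them is touched:

```shell
# Scratch directories so the experiment is safe to run anywhere.
work=$(mktemp -d)
mkdir -p "$work/src" "$work/dest"
echo "new"   > "$work/src/new.txt"     # exists only on the source side
echo "stale" > "$work/dest/stale.txt"  # exists only on the destination side

if command -v rsync >/dev/null 2>&1; then
  # --dry-run reports what would change without changing anything;
  # --itemize-changes prints one line per affected item.
  preview=$(rsync -a --delete --dry-run --itemize-changes "$work/src/" "$work/dest/")
  printf '%s\n' "$preview"
fi

# Remove the scratch directories when done:
# rm -rf "$work"
```

The itemized list should include a `*deleting` line for stale.txt and an entry for new.txt, while both directories stay exactly as they were. Once the list looks right, drop --dry-run and run the same command for real.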

Always be careful of SOURCE and DESTINATION.

Pause and think, before setting things in motion!

If you want a little help managing a collection of commands, plus an environment conducive to building rsync command lines, you could try RsyncOSX (on a Mac) as a GUI front end, although I still prefer to run the actual command in a standalone terminal.